Edgevolution: Unleashing the Power of Kubernetes Clusters for a Revolutionary Edge Computing Experience

Kubernetes at the edge extends the powerful container orchestration capabilities of Kubernetes to decentralized environments. It enables the efficient management and deployment of containerized applications on edge devices, reducing latency, enhancing scalability, and supporting real-time processing closer to data sources.

Introduction to Edge Infrastructure

In the context of cloud computing, an edge infrastructure refers to a distributed computing architecture that brings computational resources closer to the geographical location where data is generated, processed, and consumed. Traditional cloud computing models often involve centralized data centres located at a considerable distance from end-users. In contrast, edge computing aims to reduce latency and improve performance by decentralizing computing resources and moving them closer to the "edge" of the network.

Key characteristics of edge infrastructure in the cloud computing landscape include proximity to end users, a distributed architecture with decentralized processing, and real-time processing, which goes hand in hand with bandwidth optimization because less raw data has to travel to a central site.

Last but not least, edge infrastructure brings resilience and redundancy, as well as support for mobile and IoT devices. In the Cloud Native world, edge infrastructure is often integrated with traditional cloud services: while edge computing handles localized processing, the central cloud can be used for tasks such as long-term storage, analytics, and coordination across the entire system.

Overall, edge infrastructure in the cloud computing landscape is a paradigm shift that complements traditional cloud models, addressing the need for low-latency, high-performance computing at the network periphery where data is generated and consumed. It is a key enabler for emerging technologies and applications that demand real-time processing and responsiveness, where Kubernetes can play a relevant role.

The role of Kubernetes at the Edge

Kubernetes on edge infrastructures offers several benefits that make it a valuable solution for managing and orchestrating applications in distributed environments. Edge computing involves processing data close to where it is generated rather than relying on a centralized data-processing facility. Besides the well-known benefits of traditional Kubernetes infrastructures, running Kubernetes at the edge helps organizations achieve resource efficiency and acts as the infrastructure glue that addresses edge device heterogeneity. It also enables efficient edge data processing, a strategy that consists of analysing and processing data locally before sending only the relevant information to the central cloud. This is especially beneficial for applications that require real-time processing and decision-making.
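To make the edge data processing idea concrete, here is a minimal Python sketch of a workload that reduces a window of raw readings to a compact summary before anything leaves the edge location. The `Summary` type, the `summarize_locally` function, the threshold, and the sample values are all hypothetical and only illustrate the pattern; a real application would choose its own aggregation logic.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Summary:
    """Compact record forwarded to the central cloud instead of raw samples."""
    count: int
    mean: float
    maximum: float
    anomalies: list[float]


def summarize_locally(readings: list[float], threshold: float) -> Summary:
    """Reduce a window of raw readings to a single summary on the edge node."""
    if not readings:
        raise ValueError("readings must not be empty")
    return Summary(
        count=len(readings),
        mean=mean(readings),
        maximum=max(readings),
        # Only values above the (hypothetical) threshold are kept verbatim.
        anomalies=[r for r in readings if r > threshold],
    )


# Hypothetical window of temperature readings collected at one edge location.
window = [21.0, 21.5, 22.0, 35.0, 21.5]
summary = summarize_locally(window, threshold=30.0)
print(summary)
```

Only the summary, rather than every raw sample, would then be sent upstream, which is where the latency and bandwidth benefits described above come from.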

The resource-burden of Kubernetes

Edge infrastructures are typically small data centres with a limited amount of available resources. In a nutshell, edge computing is all about resource-constrained environments, which makes running Kubernetes in a highly available way challenging.

The Kubernetes Control Plane is the brain of the cluster. It is a resource-intensive set of workloads because its core components, such as the API server, the controller manager, and the scheduler, handle critical orchestration and management tasks.

Furthermore, a single instance of the Control Plane is not enough to meet High Availability requirements: a minimum of 3 instances is required to ensure the business continuity of the API Server, which doesn’t fit well in constrained environments such as Edge deployments.
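One common reason behind the three-instance figure is majority quorum in the cluster datastore (etcd in a typical setup). The text above does not spell this out, so treat it as an assumption. The Python sketch below only shows the arithmetic; the function names are illustrative.

```python
def quorum(members: int) -> int:
    """Smallest number of members that forms a strict majority."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can be lost while a majority is still reachable."""
    return members - quorum(members)


for n in (1, 2, 3, 5):
    print(f"members={n} quorum={quorum(n)} tolerated_failures={tolerated_failures(n)}")
```

With one or two members, no failure can be tolerated; three is the smallest size that survives the loss of one member, which is why a highly available control plane is hard to fit into a small edge location.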

Offloading the Kubernetes control plane and consuming it as-a-Service

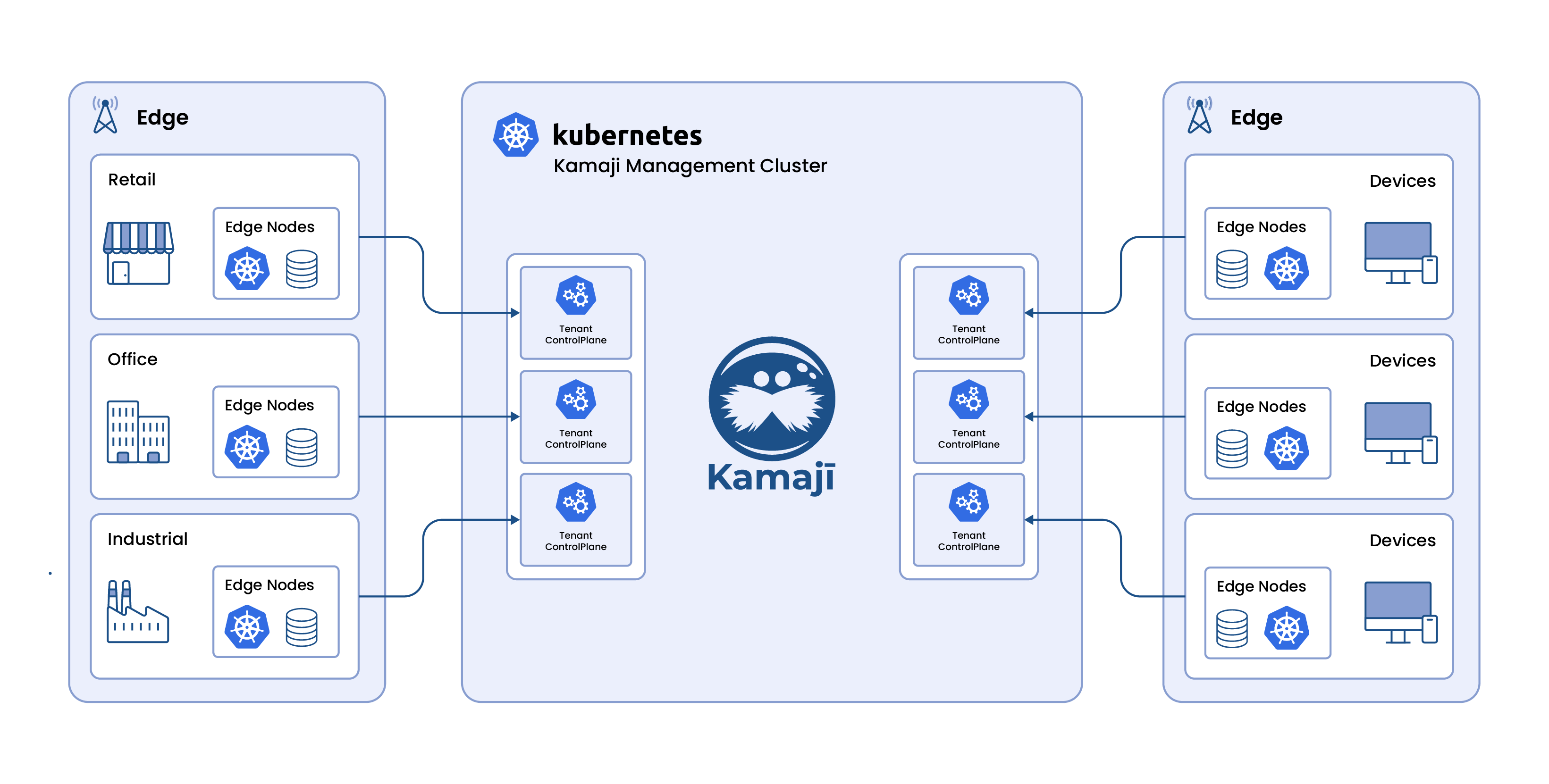

Kamaji is the reference project for running Hosted Kubernetes Control Planes, an innovative way of consuming Kubernetes as a service while offloading the operational burden to a central management platform.

In the reference architecture we’re presenting, a Management Cluster hosts several Kubernetes Control Planes powered by Kamaji, which is responsible for their maintenance and high availability. If you would like to know more about the benefits offered by Kamaji, please check out our previous blog post.

Since the Kubernetes Control Plane is managed by the Management Cluster, Edge Locations only need to provide compute capacity, such as a single compute node or a pair of them. These nodes register with their assigned Kubernetes Control Plane provided by Kamaji. In a nutshell, the compute power of an edge location is entirely allocated to the applications hosted there, and operations are simpler because the Control Plane is offloaded externally and kept up and running by Kamaji.

Communication between the compute nodes and the Tenant Control Planes is possible even when the nodes don't have a public IP, which the Kubernetes API Server would otherwise need in order to fetch logs, extract metrics, and execute commands in Pods' containers. Kamaji is able to provision and configure a network component named Konnectivity, which creates a reverse tunnel from the worker nodes to the API Server and allows the required communication even in network setups behind NAT.

Configuring Konnectivity, as mentioned in the official documentation, requires several error-prone tasks, as well as customization of the Kubernetes API Server with several flags, volumes, and config files. With Kamaji, this component can be enabled simply by defining it in the Tenant Control Plane specification, and all the tedious tasks will be performed by Kamaji on your behalf, streamlining your Day-2 operations.
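As a rough illustration of what "defining it in the Tenant Control Plane specification" might look like, the Python sketch below builds a TenantControlPlane manifest with the Konnectivity addon enabled and prints it as JSON, which `kubectl apply -f -` accepts. The field names follow our understanding of the `kamaji.clastix.io/v1alpha1` API and should be checked against the Kamaji API reference for your version. The tenant name, Kubernetes version, replica count, service type, and port are hypothetical values.

```python
import json


def tenant_control_plane(name: str, version: str, replicas: int = 2) -> dict:
    """Build a TenantControlPlane manifest with the Konnectivity addon enabled.

    Field names are assumed from the Kamaji v1alpha1 API; verify them
    against the CRD installed in your Management Cluster.
    """
    return {
        "apiVersion": "kamaji.clastix.io/v1alpha1",
        "kind": "TenantControlPlane",
        "metadata": {"name": name},
        "spec": {
            "controlPlane": {
                "deployment": {"replicas": replicas},
                "service": {"serviceType": "LoadBalancer"},
            },
            "kubernetes": {"version": version},
            "addons": {
                # Declaring the addon is what asks Kamaji to set up the tunnel.
                "konnectivity": {"server": {"port": 8132}},
            },
        },
    }


# Hypothetical tenant for a single edge location.
manifest = tenant_control_plane("edge-site-01", "v1.29.0")
print(json.dumps(manifest, indent=2))
```

The point of the sketch is the size of the declaration: the addon is a few lines in the spec, while the flags, volumes, and config files mentioned above are left to Kamaji.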

This architecture brings several advantages, both from the hardware and software standpoint.

Containerized Control Plane: running the Kubernetes control plane as pods allows for a containerized and modular approach. This can simplify deployment, updates, and maintenance, making it well-suited for edge environments with limited resources and a need for efficient resource utilization.

Resource Efficiency: containerizing the control plane components helps optimize resource usage by allowing more granular control over resource allocation. This is especially beneficial in edge infrastructure scenarios where resources in edge locations may be constrained.

Simplified Management: the Kamaji-based control plane containerization enables a single pane of glass for simplified management. This means centralized application delivery, monitoring, and troubleshooting, which eases the operational burden on administrators and offers a 10,000-foot view of your distributed infrastructure, so that compute nodes in edge locations can be treated as cattle rather than pets.

Cost-Effective: leveraging Kamaji can be cost-effective for edge deployments, as our recent survey found a 60% improvement in resource optimization when running containerized Kubernetes Control Planes rather than the traditional VM-based approach.

How CLASTIX can help in addressing Kubernetes at the Edge

As we embark on the journey of transforming edge computing with Kubernetes, the vision of a seamlessly orchestrated environment becomes paramount. In this blog post, we've explored the game-changing benefits of running Kubernetes on the edge, empowered by the simplicity and control offered through a single pane of glass management platform.

At the forefront of this revolution is CLASTIX Platform, our cutting-edge product designed to elevate your edge infrastructure experience. With CLASTIX Platform, managing Kubernetes on the edge becomes a breeze, from centralized orchestration to resource optimization and beyond. Our commitment to providing robust support aligns with your vision of a resilient, high-performance edge ecosystem.

Discover a new era of edge computing with Kubernetes, where CLASTIX Platform empowers you to harness the full potential of your distributed environment. Join us as we redefine the boundaries of possibility in edge computing and embark on a journey towards unparalleled efficiency, scalability, and success.