Clastix launches Kamaji, a new open-source tool for Managed Kubernetes Services

At Clastix, we're proud to announce Kamaji, a new open-source tool to build and operate Managed Kubernetes Services with unprecedented ease.

Kubernetes adoption has grown rapidly. A recent report by the Cloud Native Computing Foundation (CNCF) found that more than 96% of organizations are either using or evaluating Kubernetes, a record high since the surveys began in 2016. With this de facto status, Kubernetes is now going “under the hood”, much like Linux, as more organizations rely on managed services and packaged platforms from cloud and service providers.

Global hyperscalers such as AWS, Azure, GCP, and a few others lead this market, while regional cloud providers and large corporations struggle to offer their developers the same level of experience because they lack the right tools. At the same time, on-premises Kubernetes solutions are designed with an enterprise-first approach: they are too costly when deployed at scale and require a management effort that is not competitive. Project Kamaji aims to solve this pain by leveraging multi-tenancy and simplifying how clusters are run at scale, with a fraction of the operational burden.

Anatomy of a Managed Kubernetes Service

One of the most fundamental choices when building a managed Kubernetes service is how to deploy, manage, and operate multiple clusters at scale. Because all these clusters must be resilient, isolated, and cost-optimized, managing them can put heavy pressure on Ops teams.

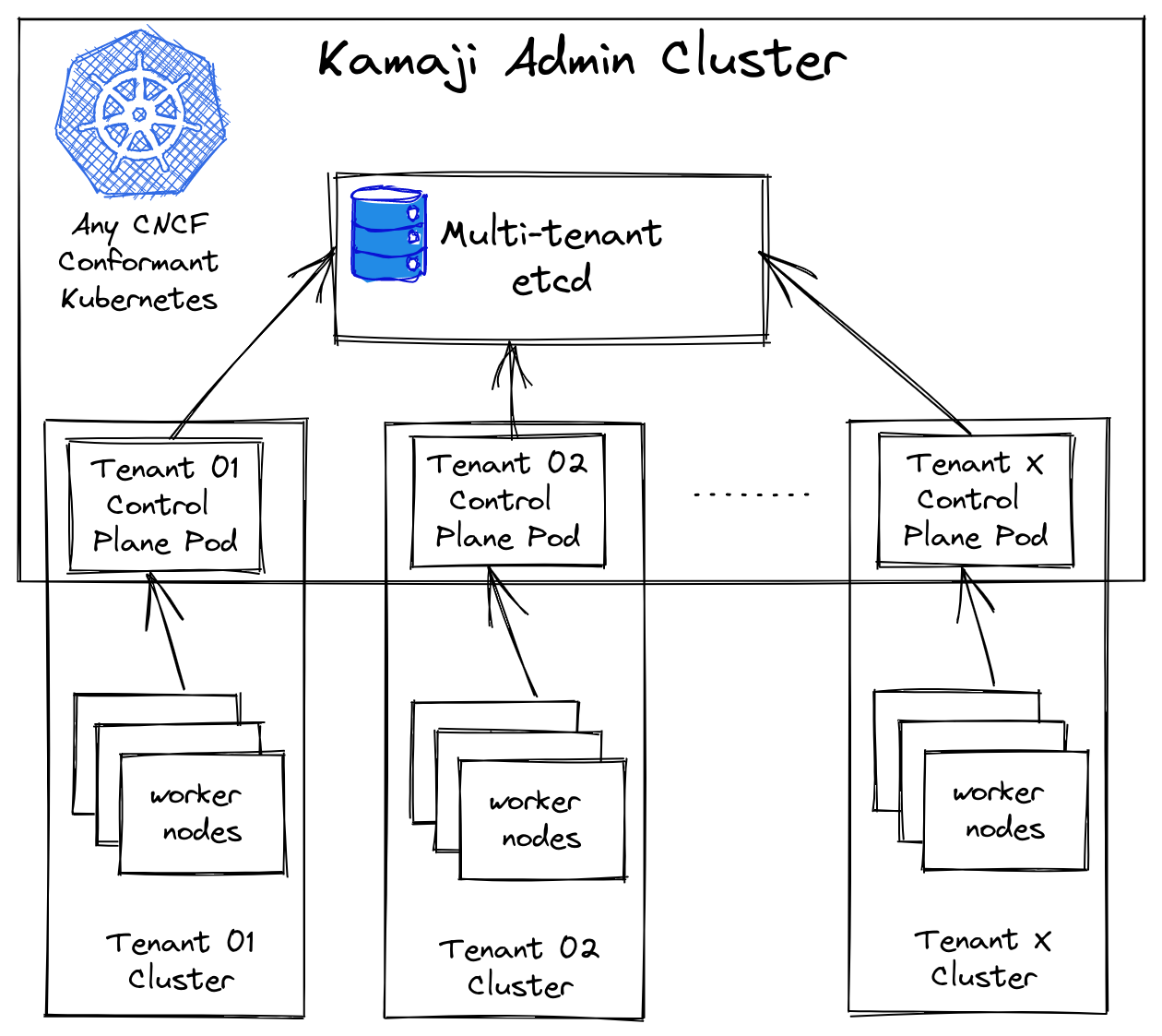

So, at Clastix, we decided to use Kubernetes itself to orchestrate other Kubernetes clusters. This idea, also known as “kube inception”, is not new, but we're trying to take it to the next level. To achieve this, Kamaji turns any conformant Kubernetes cluster into an “admin cluster” that orchestrates other Kubernetes clusters, called “tenant clusters”.

As with every Kubernetes cluster, a tenant cluster has a set of nodes and a control plane composed of several components: the API server, the scheduler, and the controller manager. Kamaji deploys these tenant control plane components as a pod running in the admin cluster. As a result, all the stateless components of a tenant cluster's control plane run as a lightweight pod instead of on multiple virtual machines. We haven't mentioned etcd, the key-value datastore that keeps the state of the cluster; we will discuss it later.
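To make this layout concrete, here is a minimal Go sketch. It assumes hypothetical, simplified `TenantSpec`, `Pod`, and `Container` types (not the Kubernetes API types and not Kamaji's own resources). It packs the three stateless components into a single pod in a per-tenant namespace of the admin cluster and deliberately leaves etcd out. The `tenant-<name>` namespace scheme and the image tags are illustrative choices.

```go
package main

import "fmt"

// Hypothetical, simplified types for illustration only; they are not the
// Kubernetes API types or Kamaji's own resources.
type Container struct {
	Name  string
	Image string
}

type Pod struct {
	Name       string
	Namespace  string
	Containers []Container
}

// TenantSpec describes the control plane of one tenant cluster.
type TenantSpec struct {
	Name    string
	Version string // Kubernetes version, e.g. "v1.23.5"
}

// controlPlanePod packs the stateless control plane components of a tenant
// cluster into one pod, placed in the tenant's own namespace on the admin
// cluster. etcd is intentionally absent: the state is stored elsewhere.
func controlPlanePod(t TenantSpec) Pod {
	components := []string{"kube-apiserver", "kube-scheduler", "kube-controller-manager"}
	containers := make([]Container, 0, len(components))
	for _, c := range components {
		containers = append(containers, Container{
			Name:  c,
			Image: fmt.Sprintf("registry.k8s.io/%s:%s", c, t.Version),
		})
	}
	return Pod{
		Name:       t.Name + "-control-plane",
		Namespace:  "tenant-" + t.Name, // hypothetical naming scheme
		Containers: containers,
	}
}

func main() {
	pod := controlPlanePod(TenantSpec{Name: "acme", Version: "v1.23.5"})
	fmt.Printf("%s/%s\n", pod.Namespace, pod.Name)
	for _, c := range pod.Containers {
		fmt.Println("  ", c.Name, c.Image)
	}
}
```

In practice such a pod would typically be managed by a Deployment so that Kubernetes restarts and replicates it; the sketch only shows what goes into it.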

And what about the tenant worker nodes? They are just regular worker nodes: virtual or bare-metal instances that connect to the API server of the tenant cluster. Our goal is to manage the lifecycle of hundreds of clusters, not just one, so how do we add another tenant cluster? As you might expect, Kamaji simply deploys a new tenant control plane as a new pod in the admin cluster and then joins the new tenant worker nodes to it.
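The point that adding a cluster is just adding another control plane can be sketched in a few lines of Go. This is a hypothetical, in-memory stand-in for the admin cluster, not Kamaji's implementation: `AdminCluster`, `AddTenant`, and the one-port-per-tenant addressing on `admin.example.com` are all assumptions made for illustration. Each call records a new control plane and returns the API server endpoint the tenant's worker nodes would join; existing tenants are untouched.

```go
package main

import (
	"errors"
	"fmt"
	"sort"
)

// AdminCluster is a hypothetical, in-memory model of the admin cluster: it
// only tracks which tenant control planes exist and where their API servers
// can be reached by worker nodes.
type AdminCluster struct {
	endpoints map[string]string // tenant name -> API server endpoint
	nextPort  int
}

func NewAdminCluster(basePort int) *AdminCluster {
	return &AdminCluster{endpoints: map[string]string{}, nextPort: basePort}
}

// AddTenant "deploys" a new tenant control plane and returns the endpoint
// that the tenant's worker nodes would connect to.
func (a *AdminCluster) AddTenant(name string) (string, error) {
	if _, exists := a.endpoints[name]; exists {
		return "", errors.New("tenant already exists: " + name)
	}
	// Hypothetical addressing scheme: one port per tenant on a shared address.
	ep := fmt.Sprintf("https://admin.example.com:%d", a.nextPort)
	a.nextPort++
	a.endpoints[name] = ep
	return ep, nil
}

// Tenants returns the tenant names in sorted order.
func (a *AdminCluster) Tenants() []string {
	names := make([]string, 0, len(a.endpoints))
	for n := range a.endpoints {
		names = append(names, n)
	}
	sort.Strings(names)
	return names
}

func main() {
	admin := NewAdminCluster(6443)
	for _, t := range []string{"acme", "globex"} {
		ep, err := admin.AddTenant(t)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Printf("tenant %s: worker nodes join %s\n", t, ep)
	}
	fmt.Println(admin.Tenants())
}
```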

All tenant clusters built with Kamaji are fully CNCF-compliant Kubernetes clusters and work with the standard toolchains everybody knows and loves.

Save the state

Putting the tenant control plane in a pod is the easy part. We also have to make sure each tenant cluster persists its state so that it can store and retrieve data. The real question is where and how to deploy etcd so that it is available to each tenant cluster.

Giving each tenant cluster its own etcd initially seems like a good idea. However, etcd must be resilient, so it has to be deployed with enough replicas to maintain a quorum, each replica with its own persistent volume. Managing data persistence in Kubernetes at scale can be cumbersome, and mitigating that complexity drives up operational costs.

A dedicated etcd cluster for each tenant cluster does not scale well for a managed service, because users are billed only for the worker node resources they consume. For the service to be competitive, the resources consumed by the tenant control planes must therefore be kept under control.
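A back-of-the-envelope count shows why. It assumes three members per dedicated etcd cluster (the smallest size that survives a member failure) and an illustrative fleet of N = 100 tenants; these figures are assumptions, not Clastix measurements. etcd needs a majority of its members, a quorum q(n), to accept writes:

```latex
\begin{aligned}
q(n) &= \left\lfloor n/2 \right\rfloor + 1, \qquad q(3) = 2
  \quad \text{(a 3-member cluster tolerates } 3 - 2 = 1 \text{ failure)} \\
\text{dedicated etcd members} &= 3N = 3 \times 100 = 300
  \quad \text{(each with its own persistent volume)}
\end{aligned}
```

None of those 300 pods runs tenant workloads, so none of them is billed to users.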

So we had to find an alternative that is resilient and cost-optimized at the same time. Since any Kubernetes cluster can be deployed with an external etcd cluster, we explored this option for the tenant control planes: on the admin cluster, we can deploy a multi-tenant etcd cluster that stores the state of all the tenant clusters.

With this solution, resiliency is guaranteed by etcd's usual Raft-based replication and the pod count stays under control, so both goals, resiliency and cost optimization, are met. The trade-off is that we have to operate an external etcd cluster and manage access to it, to make sure each tenant cluster uses only its own data. etcd also limits its storage size: the backend quota (set with etcd's `--quota-backend-bytes` flag) defaults to 2 GB, with 8 GB as the suggested maximum.
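One common way to keep tenants apart in a shared etcd is to give each tenant API server its own key prefix. `--etcd-servers` and `--etcd-prefix` are real kube-apiserver flags (the prefix defaults to `/registry`); the `/<tenant>` prefix scheme, the etcd endpoints, and the `canAccess` helper below are hypothetical, and this is not necessarily how Kamaji does it. The helper only models the prefix check in Go; it also shows why the prefix needs a trailing slash.

```go
package main

import (
	"fmt"
	"strings"
)

// etcdPrefix returns the key prefix a tenant's API server writes under when
// several tenants share one etcd cluster (hypothetical naming scheme).
func etcdPrefix(tenant string) string {
	return "/" + tenant
}

// apiServerEtcdFlags builds the etcd-related kube-apiserver flags for one
// tenant. The endpoints passed in are placeholders.
func apiServerEtcdFlags(tenant string, endpoints []string) []string {
	return []string{
		"--etcd-servers=" + strings.Join(endpoints, ","),
		"--etcd-prefix=" + etcdPrefix(tenant),
	}
}

// canAccess models a prefix-scoped permission: a tenant may touch a key only
// if it lies under "<prefix>/". Without the trailing slash, tenant "acme"
// would also match every key of a tenant named "acme2".
func canAccess(tenant, key string) bool {
	return strings.HasPrefix(key, etcdPrefix(tenant)+"/")
}

func main() {
	fmt.Println(apiServerEtcdFlags("acme", []string{"https://etcd-0:2379", "https://etcd-1:2379", "https://etcd-2:2379"}))
	fmt.Println(canAccess("acme", "/acme/pods/default/web"))  // true
	fmt.Println(canAccess("acme", "/acme2/pods/default/web")) // false
	fmt.Println(canAccess("acme", "/globex/secrets/db"))      // false
}
```

In a real deployment, the check would be enforced by etcd itself rather than by application code: etcd's role-based access control can grant a role read/write access to a key prefix, for example `etcdctl role grant-permission acme-role --prefix=true readwrite /acme/` (the role name is hypothetical), with each tenant API server authenticating as its own etcd user.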

At Clastix, we're addressing this by pooling multiple etcd clusters and sharding the tenant control planes across them. Early benchmarks showed that we can serve many tenant clusters with an order of magnitude fewer etcd clusters.
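Sharding tenants across a pool of etcd clusters is essentially a placement problem. The Go sketch below uses a simple least-loaded strategy with a fixed per-shard tenant capacity. Both the strategy and the `EtcdShard` type are assumptions made for illustration; the article does not describe how Kamaji actually places tenants.

```go
package main

import (
	"errors"
	"fmt"
)

// EtcdShard is a hypothetical record of one etcd cluster in the pool.
type EtcdShard struct {
	Name     string
	Tenants  []string
	Capacity int // maximum number of tenants this shard accepts (assumed policy)
}

// assign places a tenant on the least-loaded shard that still has room.
// Ties go to the shard listed first, so placement is deterministic.
func assign(pool []*EtcdShard, tenant string) (*EtcdShard, error) {
	var best *EtcdShard
	for _, s := range pool {
		if len(s.Tenants) >= s.Capacity {
			continue // shard is full
		}
		if best == nil || len(s.Tenants) < len(best.Tenants) {
			best = s
		}
	}
	if best == nil {
		return nil, errors.New("etcd pool is full; add another shard")
	}
	best.Tenants = append(best.Tenants, tenant)
	return best, nil
}

func main() {
	pool := []*EtcdShard{
		{Name: "etcd-a", Capacity: 2},
		{Name: "etcd-b", Capacity: 2},
	}
	for _, t := range []string{"t1", "t2", "t3", "t4", "t5"} {
		s, err := assign(pool, t)
		if err != nil {
			fmt.Println(t, "->", err)
			continue
		}
		fmt.Println(t, "->", s.Name)
	}
}
```

Running it places t1 and t3 on etcd-a and t2 and t4 on etcd-b, then rejects t5 because both shards are full. Counting tenants is the simplest possible load metric; a production scheduler would more likely weigh each shard's database size against its storage quota.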

Hard Multi-tenancy

With Kamaji, cloud providers and large organizations can build a Managed Kubernetes Service that gives their users the same experience as public cloud providers, in the simplest and most automated way. Tenant worker nodes are isolated by the infrastructure, while the control planes run in separate Kubernetes namespaces of the admin cluster. Network Policies and policy engines can further isolate the control planes of different tenant clusters. Users should see no difference between a Kamaji-built cluster and a dedicated one. In the end, Kamaji implements a complete hard multi-tenant environment in which each tenant acts as the admin of its own clusters and shares nothing with other tenants. We're extremely excited about the Kamaji project and look forward to the community's support in making it even better.

About Clastix

Clastix is the leader in Kubernetes multi-tenancy solutions. Clastix products help organizations of any size overcome Day 2 challenges and confidently run Kubernetes-based infrastructure. Clastix is the author of Capsule, an open-source operator for implementing multi-tenancy in Kubernetes, currently used in production by customers such as Fastweb, Wargaming, and many others.